Care about RPO’s and RTO’s? Then you should be backing up your Windows Azure SQL Databases(Formerly SQLAzure).

Windows Azure SQL Database is highly available and data is redundant(3 copies of your database is stored elsewhere),however that doesn’t help you to recover from the below situation –

“Hey DBA, I deleted few records from the database accidently !!! Can you please recover the database for me ? “

You definitely need a backup of the database to recover from this situation.

One of the assumptions which I normally hear while talking about Windows Azure SQL Database is that you don’t need to backup your databases and Microsoft takes care of it under the hood ! This is wrong, and you should do it in-case you have a need to tackle situations like what was mentioned above.

You can either do a manual export of your database to the storage account or you can schedule the exports(New Update, Scroll down for details).This exported copy can be used to do the restores(Imports).

The import options are really limited. You cannot do operations like overwriting(Replace) a database etc. I’m really confident that Azure will reach that point pretty soon.

In this post we will see how the manual export process works and will also see how we can import an exported database back.

When doing this manually its always a good idea to get a transactionally consistent backup copies. For this purpose we will need to copy the database to the same server or to a different server. In this post we will do a copy to the same server.

So, we have a database called WASDRocks with a table named ChildTable. The table as 2 records as shown below

We will now do a database copy to the same server using command

CREATE DATABASE WASDROCKS_Copy AS COPY OF [WASDROCKS]

There you go, we have the new database ready now which is a transactionally consistent copy.

We will now export the WASDROCKS_Copy and keep it safe under our storage account. Export option is available right below the database selection.

Storage account needs to be selected along with container details and once the credentials are entered correctly(Real time check of passwords !!!) the .BACPAC will be available.

Great, so now we have a transactionally consistent database backup. We can drop this database to avoid additional costs(Yes,its billed)

Now, lets’ do some deletes !!! We will delete a record from the ChildTable

We can recover this data using the backup which we had taken earlier. All we need to do is an Import

Note – In a real world situation be very careful about your RPO values. You might have to increase or decrease the number of exported copies to achieve your SLA. More number of exported copies means, more cost overheads for the storage.

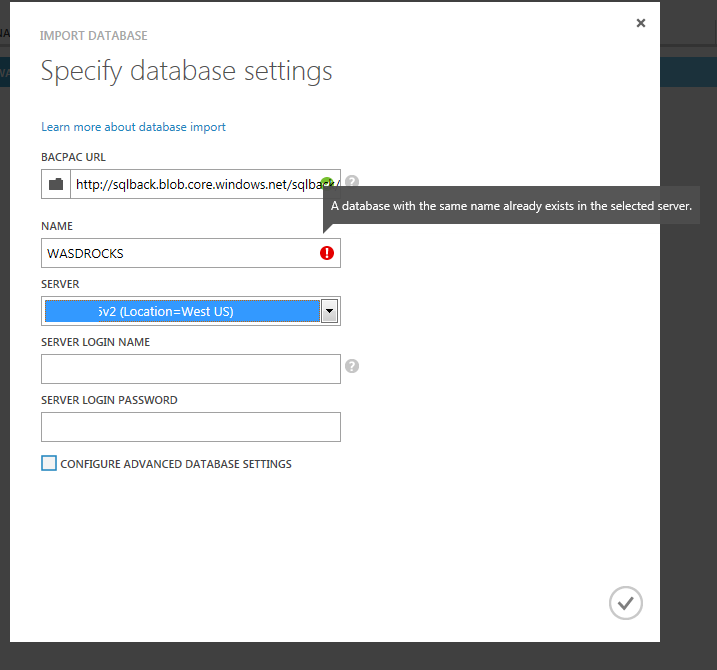

If you try to overwrite the database by giving the same database,ie WASDROCKS is our case,then there will be an error.

This clearly states the limitations of import which we talked about earlier. You cannot overwrite an existing database.

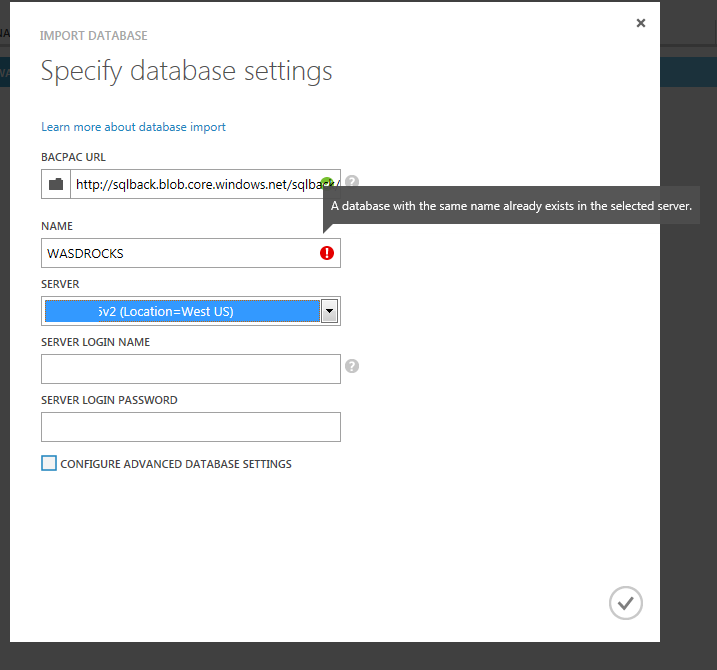

We will import the backup copy as a different database named WASDROCKS_Recovery.

There you go, the recovered database is ready for use now.

If we connect to the recovered database and check for the table, then we can find the details of the deleted row.

Yes, this is not something which is really flexible to do point -in-time restores,but it works just fine. What really matters is your RPO/RTO numbers and you can plan your exports according to that need.

Is there a way to schedule exports, so that manual intervention is limited ?

Yes,this is what I really love about Windows Azure Team. They are really aggressive and they keep adding features at a great pace.

Automated Database Exports was related last month and please refer this blog post by Scott Guthrie for more details.

Keeping backing up your databases and do random restores to ensure that they are good.

Thanks for reading and keep watching this space for more !