Page Verify = Checksum is one option which I like all my servers to be compliant. By default all the new databases which gets created will have page verify = checksum as this option is inherited from the model database.

NOTE – APPLICABLE FROM SQL2005 AND ABOVE.

SELECT name,page_verify_option_desc FROM sys.databases

WHERE database_id=3

name page_verify_option_desc

======= ==================

model CHECKSUM

So far so good. But what will happen if we restore a database from SQL 2000 or even restore a database which is having a different page verify option other than checksum.

Lets check that out –

For the demo purpose on Server A we already have a database named Check_Page_Verify which is having a different page_verify option set as NONE(Bad idea !).

****Server A****

--Check the page_verify option of the db

SELECT name,page_verify_option_desc FROM sys.databases

WHERE database_id=6

name page_verify_option_desc

===== ==================

Check_PageVerify NONE

We will back this database up and will restore it in Server B.

****Server A****

--Backup the database

BACKUP DATABASE Check_PageVerify

TO DISK='I:\Check_PageVerify.bak'

****Server B****

--RESTORE the database

RESTORE DATABASE Check_PageVerify

FROM DISK='I:\Check_PageVerify.bak'

WITH MOVE 'Check_PageVerify' TO 'D:\Check_PageVerify.mdf',

MOVE 'Check_PageVerify_log' TO 'D:\Check_PageVerify_log.LDF'

“Data file and log file in the same drive ! (Bad idea),however as this is a demo we can ignore this.”

In Server B lets check the page_verify option

****Server B****

--Check the page_verify option of the db

SELECT name,page_verify_option_desc FROM sys.databases

WHERE database_id=6

name page_verify_option_desc

===== ==================

Check_PageVerify NONE

I started this post by saying Page Verify = Checksum is one option which I like all my servers to be compliant. Now after this restore my server is not complaint anymore for page verify. I will never come to know about this miss until and unless I regularly do compliance checks.

So how do we do that compliance checks ? This is where Policy Based Management (PBM) comes into picture.

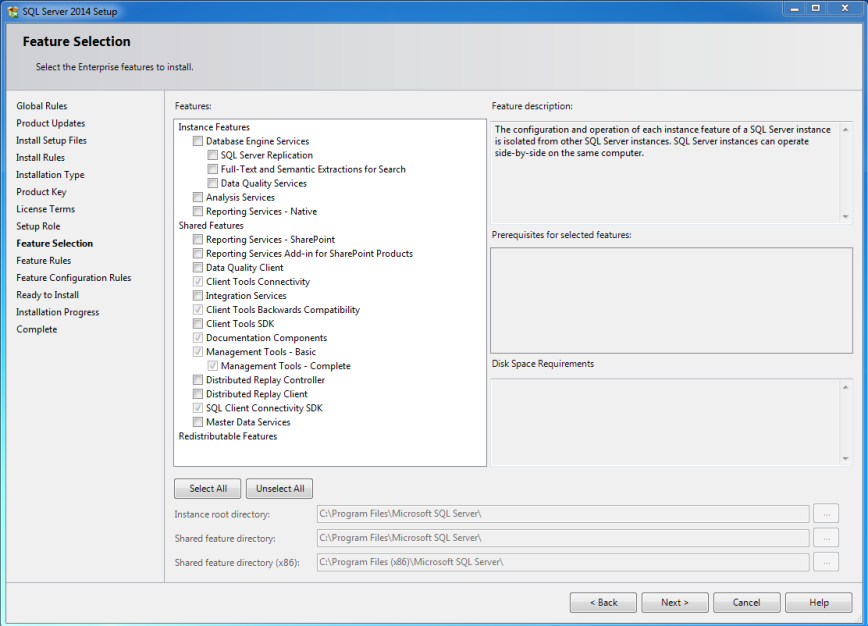

We will need to create a condition first using the wizard.

I’m using facet ‘Database options’ for this demo and added the expression @pageverify= checksum.

Now, lets create a policy for this condition.

When creating the policy we will need to choose the condition which we created earlier. The evaluation mode for this policy are of 3 types

1. On demand 2.On schedule and 3.On change Log only.

To know more about PBM concepts please ref http://technet.microsoft.com/en-us/library/bb510667.aspx

We are picking On schedule for this demo. This option needs a schedule to be created. We will create a schedule which will run at 12 AM every Sunday.

Once the schedule is created we need to ensure that policy is enabled.

The idea is to have the schedule run every Sunday to check if there are any databases which are violating the rule of page verify = checksum and on Monday morning when we come to work we will just need to check history of the policy to see if there are any violations.

For the demo purpose, lets trigger the schedule to run manually.

This is the job which was created automatically for the schedule which we created earlier.

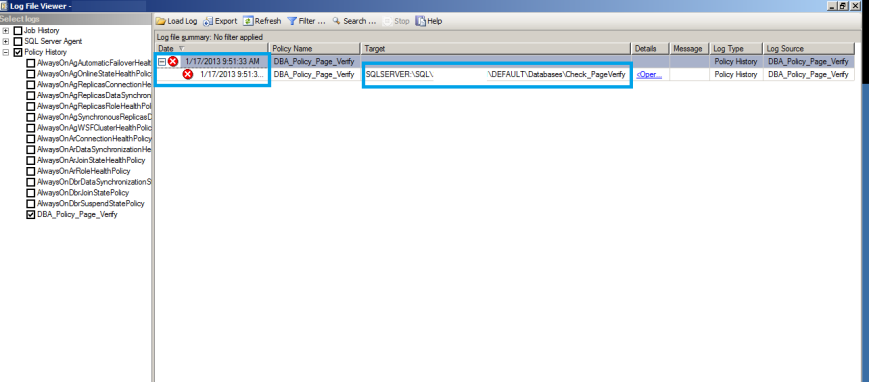

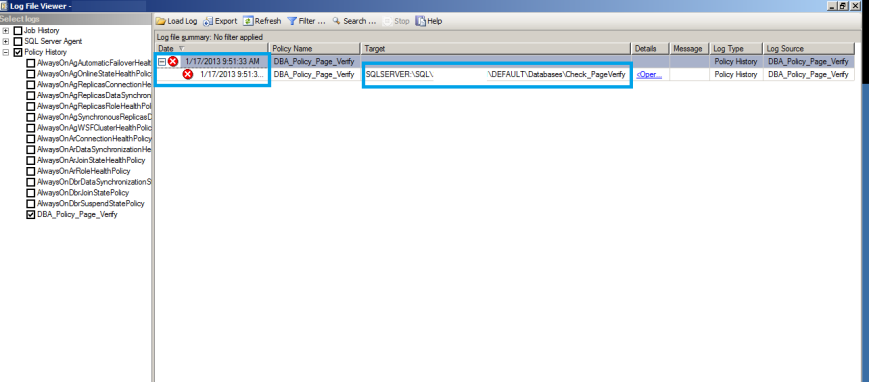

After running the job successfully, all we need to do is check the history of the policy

There you go, we got the first violation details of the policy

It clearly says database Check_PageVerify is not compliant for the policy which we created.

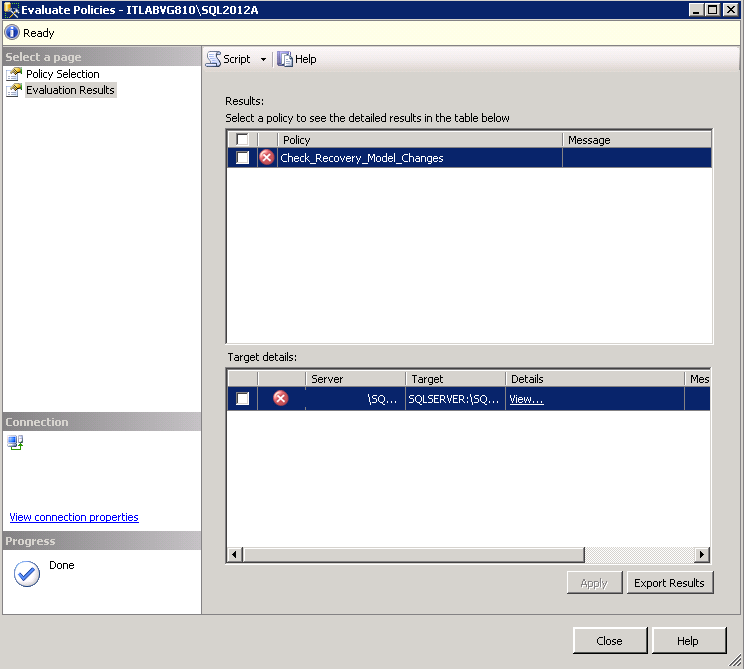

If you have multiple databases which are not compliant, then you have the option to evaluate the policy manually ,choose the one which are not complaint and force them to be complaint.

Great, now we have everything under control and all the databases are compliant.

Conclusion

There are great things which we can do with PBM and if you have a better way to achieve this using PBM(Alternate facets,condition etc) or any other methods, then I’m eager to hear from you.

Keep watching this space for more.

Thanks for reading.